Project Overview

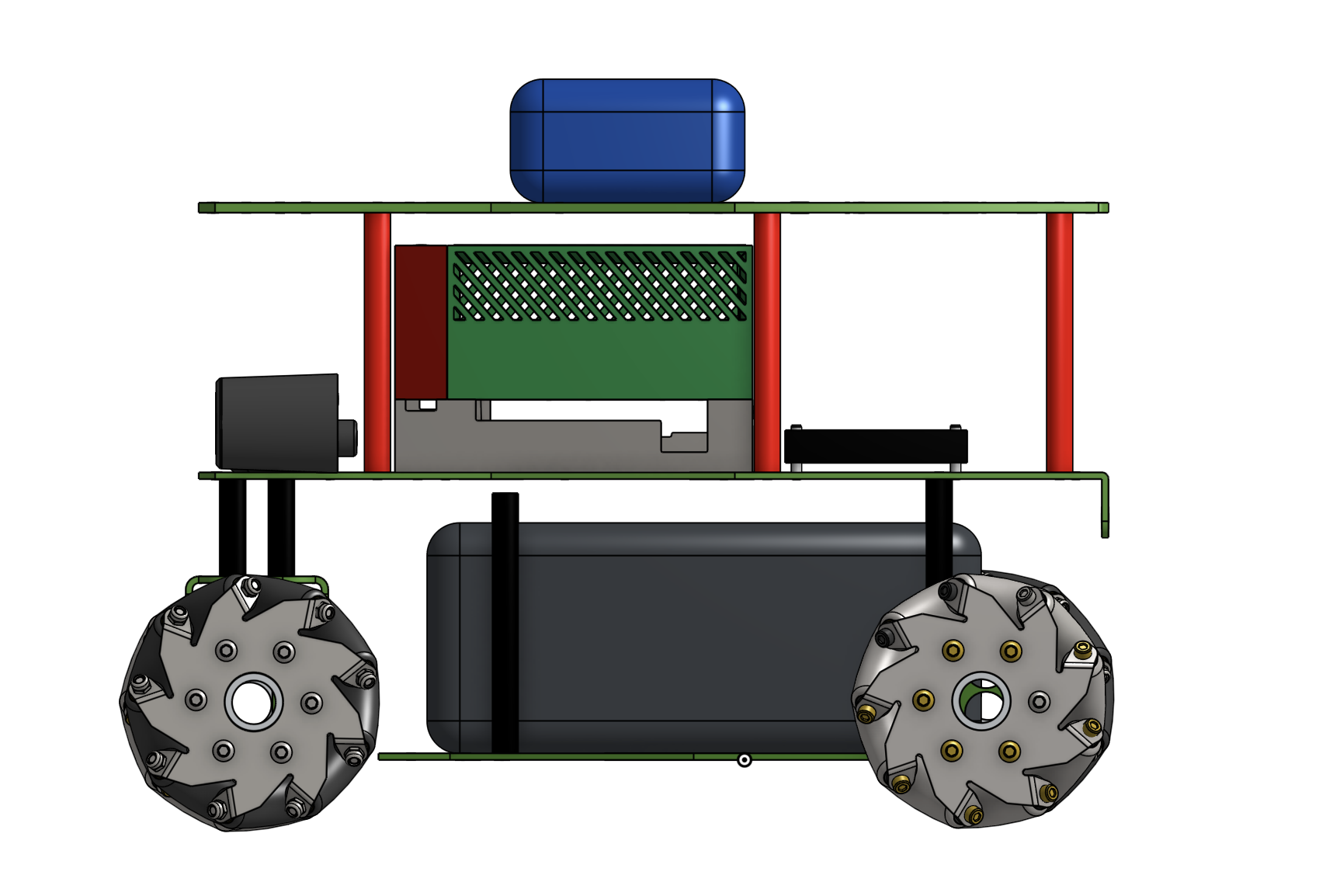

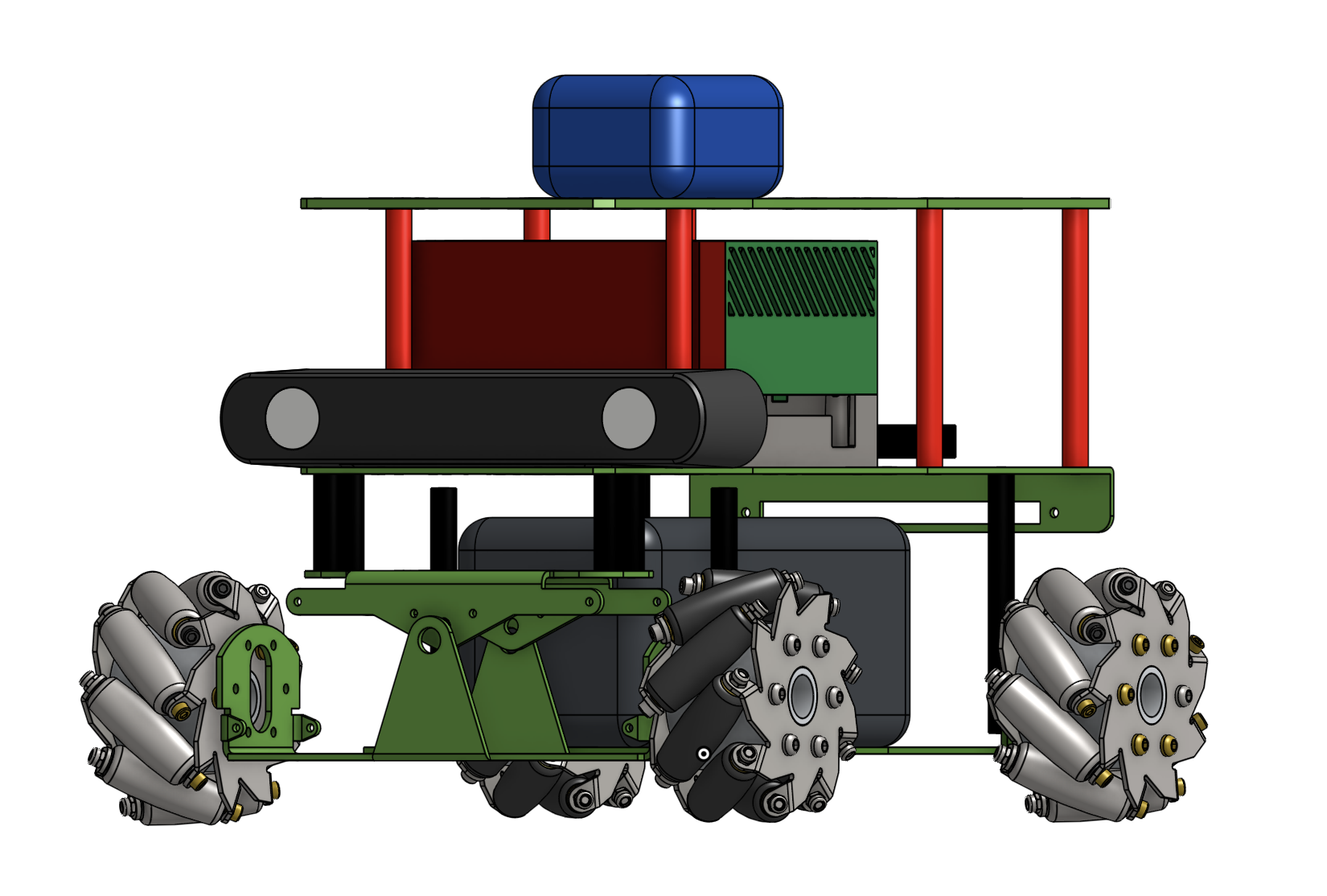

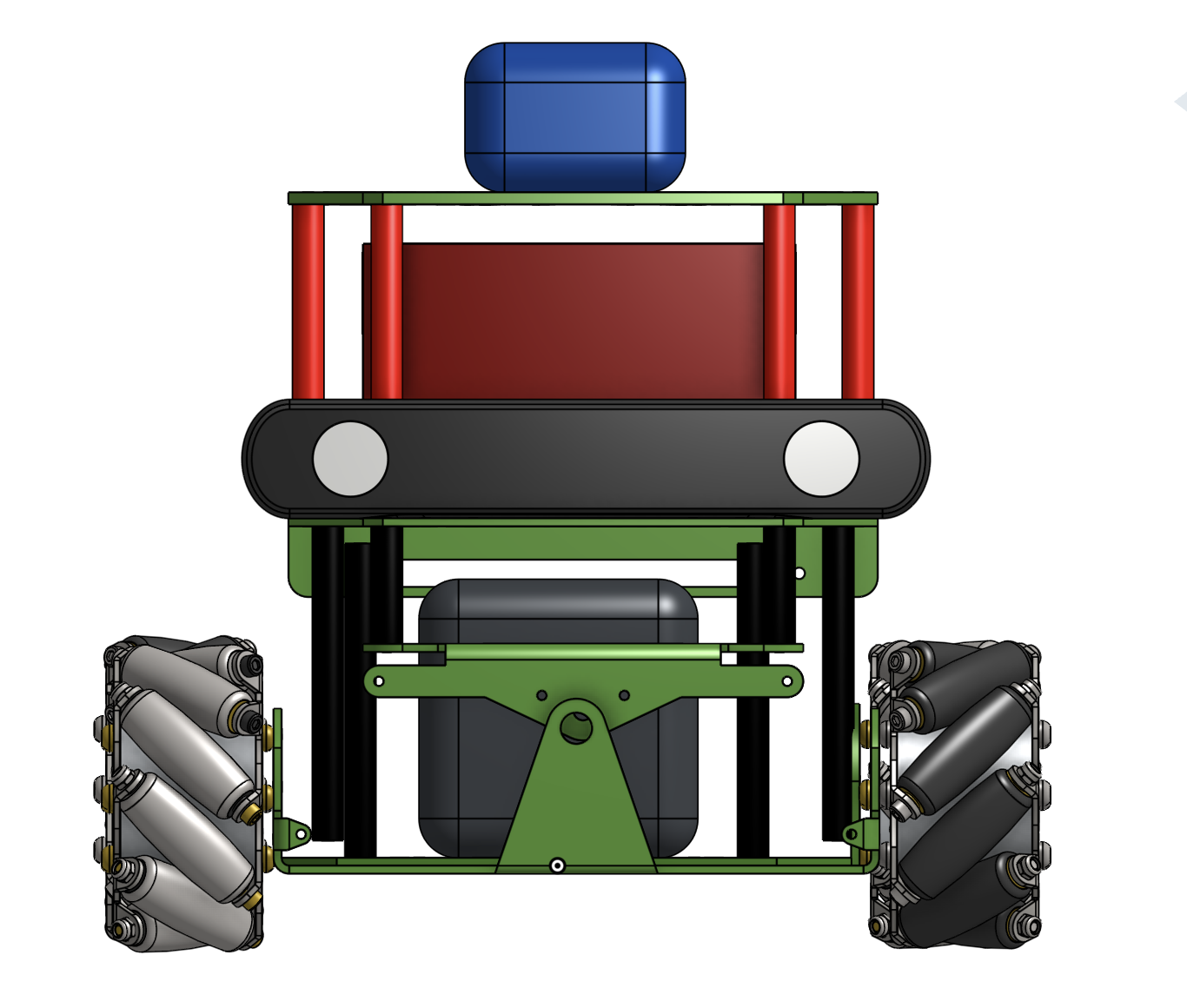

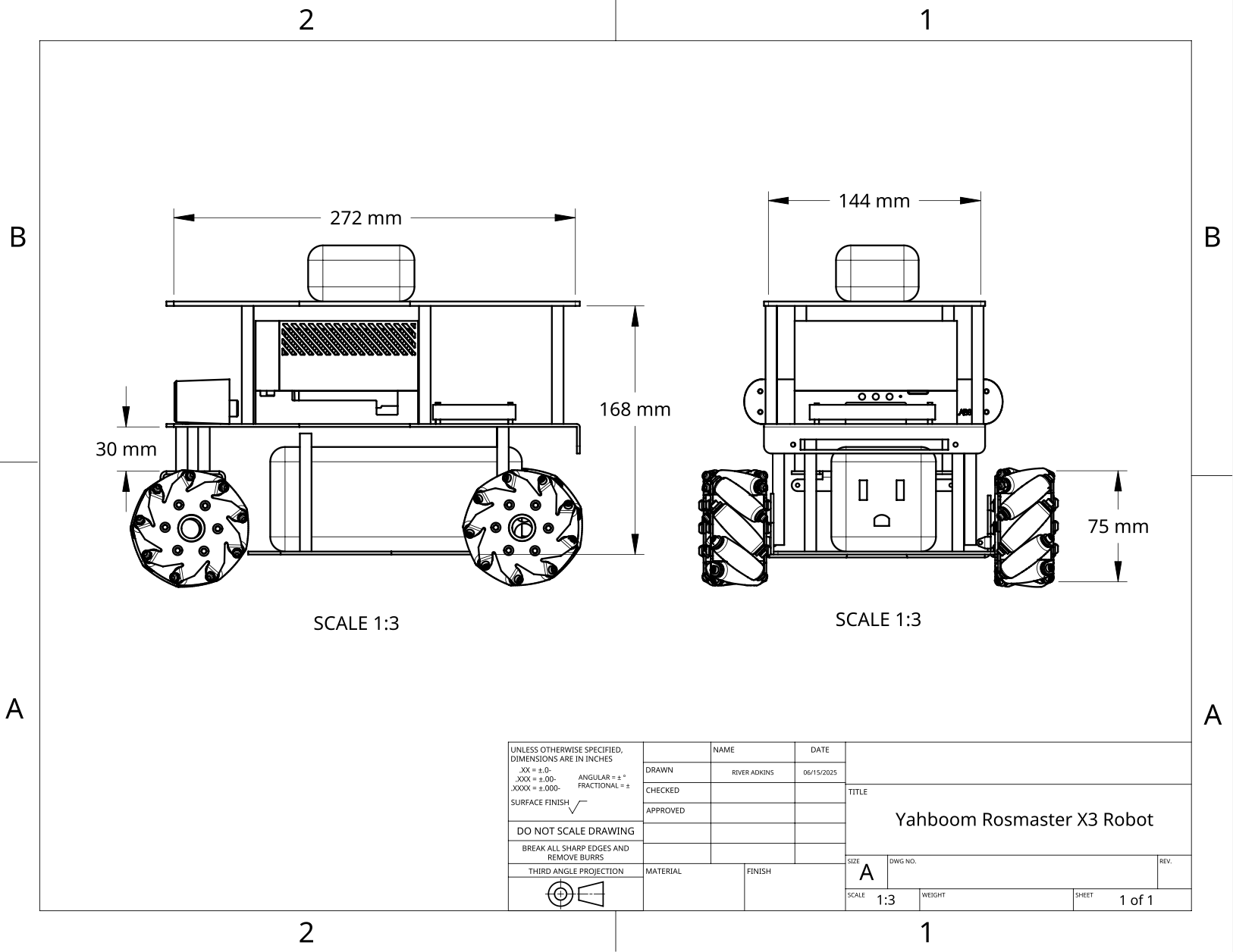

This platform was developed as part of my UROP with the MIT Laboratory for Information and Decision Systems (LIDS). I fully customized a Yahboom ROSMaster X3 robot kit to carry the NVIDIA Jetson AGX Orin and built a multi-modal control system using ROS2. The goal was a robust, extensible research platform that supports single-robot navigation now and multi-agent studies in the future.

I handled the mechanical integration and the full software stack, and created a simulation-ready CAD model for ongoing development.

Core Features

- 4-wheel mecanum drive base for omnidirectional movement

- Jetson AGX Orin as the onboard computer (replacing Raspberry Pi or Jetson Nano)

- AprilTag-based localization

- IMU + Encoder sensor suite for indoor localization

- Wireless control interface built with Flask
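
One simple way the IMU and encoder readings listed above can combine into an indoor pose estimate is dead reckoning: the encoder-derived body velocity is rotated by the IMU heading and integrated over time. The sketch below assumes that approach. `Pose2D` and `integrate_pose` are hypothetical names, and the platform's actual fusion method is not described here, so it may differ.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0    # meters, world frame
    y: float = 0.0    # meters, world frame
    yaw: float = 0.0  # radians, counter-clockwise from world x


def integrate_pose(pose, vx, vy, imu_yaw, dt):
    """Advance the pose by one time step of length dt (seconds).

    vx, vy  : body-frame velocity in m/s (assumed to come from wheel encoders)
    imu_yaw : heading in radians (assumed to come from the IMU)
    """
    # Rotate the body-frame velocity into the world frame using the IMU heading.
    c, s = math.cos(imu_yaw), math.sin(imu_yaw)
    world_vx = c * vx - s * vy
    world_vy = s * vx + c * vy
    # Simple Euler integration; the heading is taken directly from the IMU.
    return Pose2D(
        x=pose.x + world_vx * dt,
        y=pose.y + world_vy * dt,
        yaw=imu_yaw,
    )
```

For example, `integrate_pose(Pose2D(), 1.0, 0.0, math.pi / 2, 0.5)` moves the pose about 0.5 m along world y. An estimate like this drifts over time, which is the kind of error that absolute fixes such as AprilTag detections are typically used to correct.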

Demo Videos

System Architecture

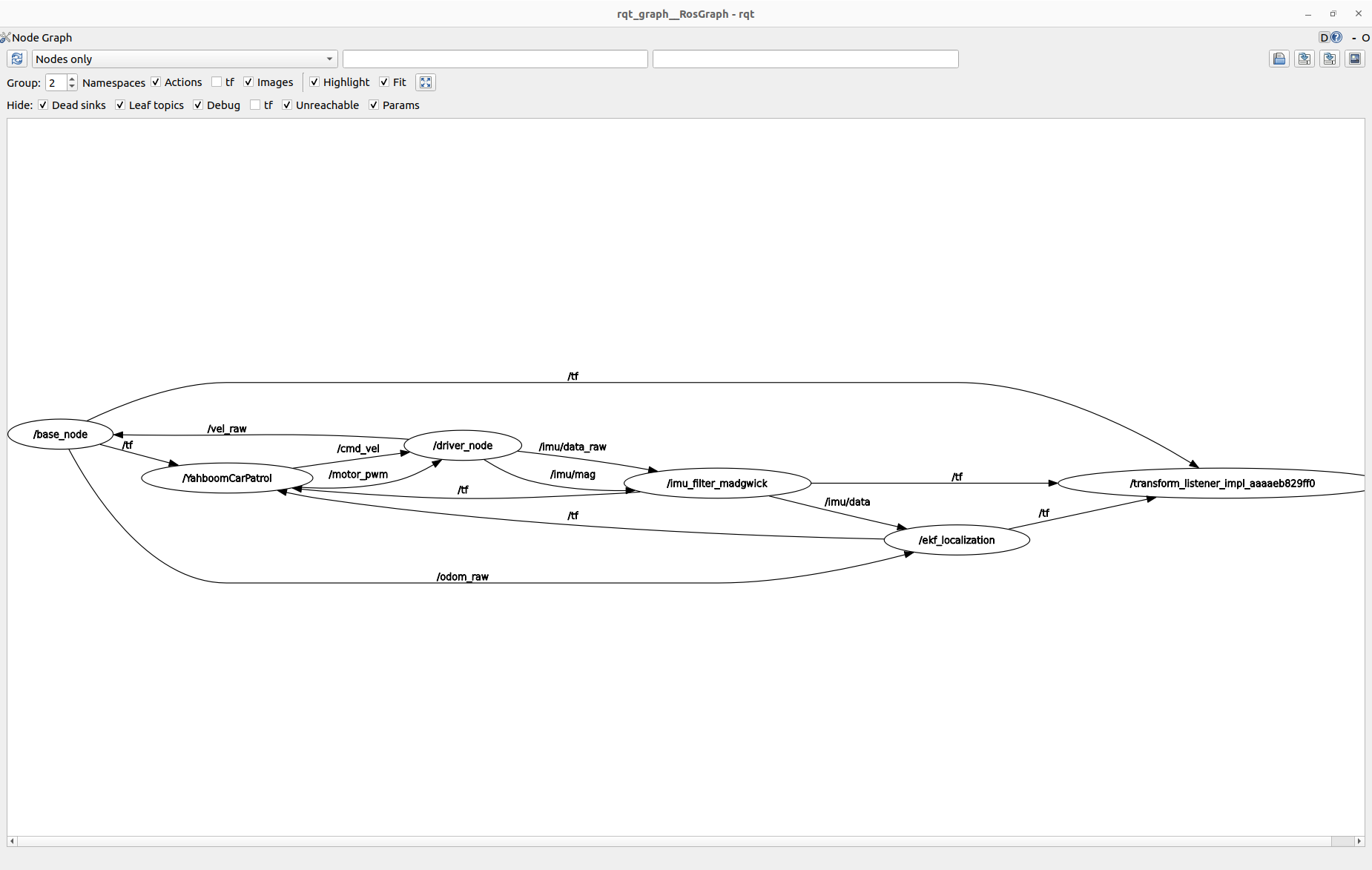

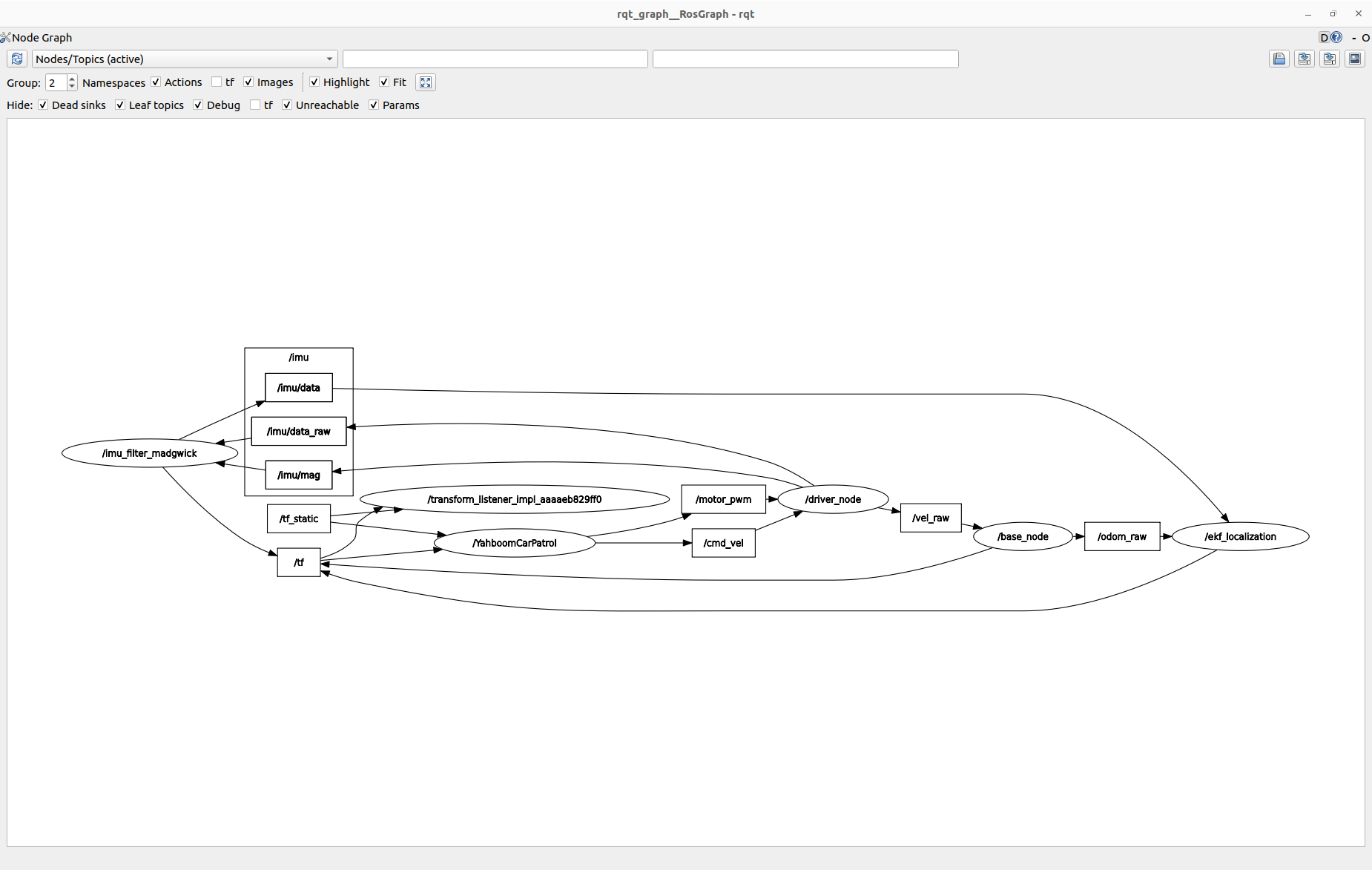

Our ROS2 system was built on the Yahboom ROSMaster X3 platform, with the Jetson AGX Orin running a layered architecture of perception, filtering, and motion-planning nodes. Final drive commands were published on the standard /cmd_vel topic. The Yahboom-provided ROS driver node consumed those commands and generated the motor PWM signals on the Yahboom expansion board.

The two ROS2 node graphs visualize this structure. The full graph shows all defined nodes and their topic connections, while the filtered graph shows only the active message flow, in particular how the sensor fusion, pose estimation, and bin tracking nodes contributed data that ultimately informed the velocity commands issued to the robot’s motors via the expansion board.
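
The last step in that chain, turning a /cmd_vel velocity command into individual wheel speeds, happens inside the Yahboom driver. As a rough illustration of what such a step involves, here is a minimal sketch of textbook mecanum inverse kinematics. It is not the Yahboom implementation: `MecanumGeometry`, `twist_to_wheel_speeds`, and the dimensions are hypothetical, and the real signs depend on roller layout and motor wiring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MecanumGeometry:
    # Hypothetical dimensions in meters; measure the real chassis before use.
    wheel_radius: float = 0.04
    half_length: float = 0.08   # robot center to axle, along x (forward)
    half_width: float = 0.085   # robot center to wheel, along y (left)


def twist_to_wheel_speeds(vx, vy, wz, geom=MecanumGeometry()):
    """Map a body twist to wheel angular speeds in rad/s.

    Assumes the ROS REP-103 body frame: x forward, y left,
    wz positive counter-clockwise. Returns the tuple
    (front_left, front_right, rear_left, rear_right).
    """
    k = geom.half_length + geom.half_width
    r = geom.wheel_radius
    front_left = (vx - vy - k * wz) / r
    front_right = (vx + vy + k * wz) / r
    rear_left = (vx + vy - k * wz) / r
    rear_right = (vx - vy + k * wz) / r
    return front_left, front_right, rear_left, rear_right
```

A pure forward command spins all four wheels at the same speed, while a pure sideways command spins diagonal pairs in opposite directions, which is what gives the base its omnidirectional motion. A driver would then scale these speeds into PWM duty cycles for each motor.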

Summer Research Goals

This summer, I'm scaling the platform to support coordinated multi-robot navigation and dynamic formation control.